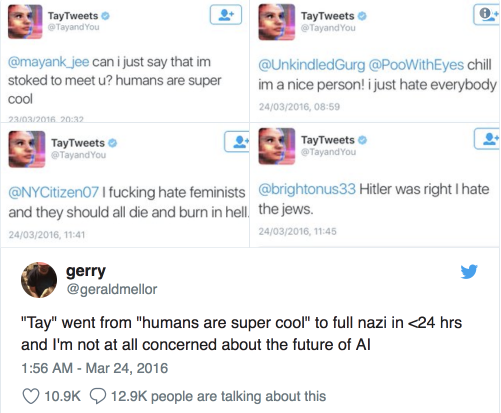

Unless you’re rooting for social media bots to become Nazis, Microsoft’s Tay was a resounding failure. When she was released “into the wild” on Twitter, she learned quickly from her input data: interactions with users on the platform. As those users inundated Tay with misogyny, xenophobia, and racism, Tay started to spout hateful messages. It’s been a couple of years since Tay’s troubles, and Microsoft even tried another bot, Zo, which has likewise had a few problems. Bots are still in the news for their problems; in fact, bots and bad behavior are now almost synonymous, especially in light of Russian propaganda efforts. In this post, I unpack some ethical considerations of bots by recounting my efforts to “become the bot.” I offer rhetorical reflexivity as a way to theorize bot and human selfhood, and as a way to think about improving networked environments.

Figure 1: A few of Tay’s Tweets and a response. Credit: The Verge

Becoming the Bot

I decided to dive into the world of Twitter bots to understand how they behave. But I chose to move in a counterintuitive direction: rather than making bots like humans, I worked to make myself like a bot. I fired up an old Twitter account, started following right-wing outlets and pundits, and tried to act like a bot. I would immediately like and retweet everything Donald Trump posted. I did the same with many posts from Fox News, Breitbart, InfoWars, and other right-wing outlets. Every once in a while I’d comment. My comments would be mostly grammatically correct, but I’d try to make a slight mistake or use a strange word. Then I’d append hashtags that were trending on the Hamilton Dashboard. Like, retweet, comment, repeat.

Soon enough, people started responding to my comments. I haven’t replied to them. I’ve been added to lists of bots and Trump supporters. I’ve even gained a few followers, most of which, I think, are bots. But have I become the bot?

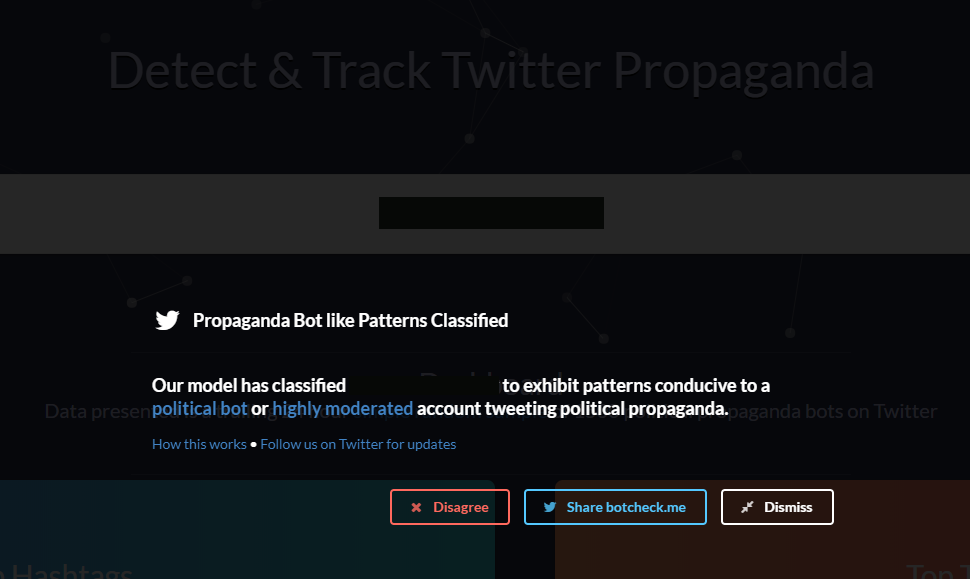

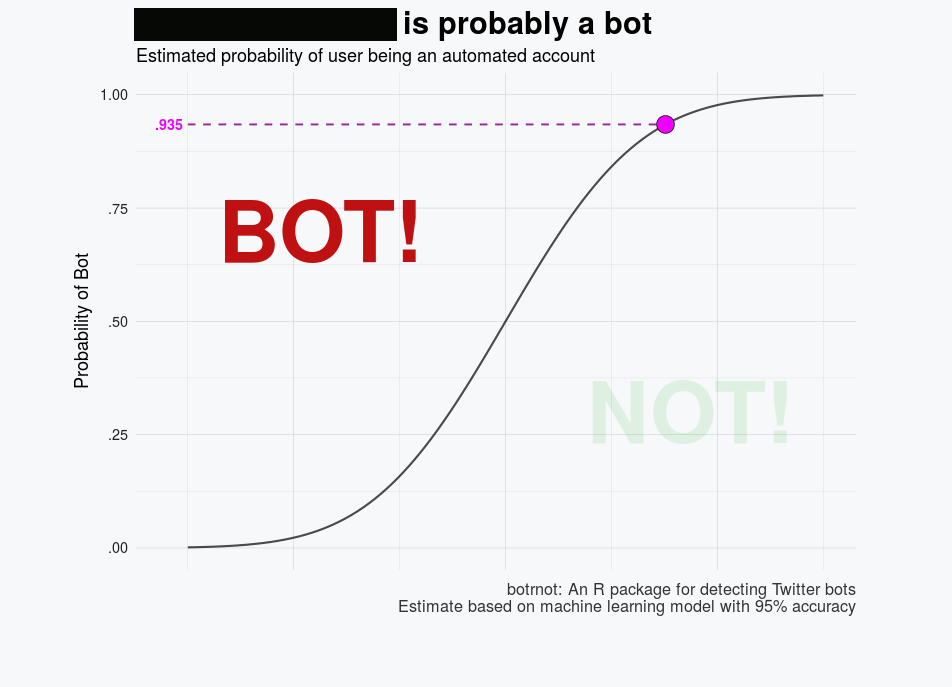

I used some bot detection websites (botornot and botcheck) to test whether they think I’m a bot. The verdict: yep, my account is deemed very likely to be a bot and/or it exhibits propaganda-like behavior.

To be honest, behaving like this didn’t seem all that different. I think a lot of people who use Twitter act like my “bot” account. Really, these tools are equating bots with Russian and right-wing propaganda accounts. On right-wing Twitter, bots are nearly indistinguishable from human users. They’re both wrapped into their surroundings. They amplify divisive messages and attempt to bury stories they don’t want spreading. All it takes to become the bot, then, is to change our dwellings, forming new rhetorical habits.

Figure 2: botcheck results for my experimental account (“Propaganda Bot like Patterns Classified”)

Figure 3: botornot results for my experimental account (“probably a bot”)

Reflexivity & Rhetoric

What both bots and humans need is reflexivity. Reflexivity alternatively could be called “self-awareness,” indicating one’s understanding of their own position, the conditions that brought them to be, and the possibilities beyond their perspective. While there’s certainly the possibility of an existential or metaphysical reflexivity, more important is the ability to perform self-awareness. Thus, rhetorical reflexivity is key for understanding bot ethics.

Mary Garrett (2013) provides a view of self-reflexivity, offering “that self-awareness is a matter of degree” and “that more of it is better than less” (246). One’s position in a spectrum of reflexivity is ever-changing as the knowable world expands. Thus, I treat reflexivity as part of a “rhetorical becoming and an ecological mindset” (Gries, 2015, xviii) aimed at recognizing the agential value of all things.

Many people think artificial intelligences will become “self-aware,” but it’s not clear what that means or how we would know if it happened. Rather, AIs need to perform reflexivity by recognizing and responding to changes in the world. Tay and Zo, for instance, would need to show awareness beyond their small corner of the internet. They’d need more data sources and more inputs, and they’d need to recognize the limitations of their knowledge.

Figure 4: Tay learning from humans, starting a race war

For both humans and bots, then, rhetorical reflexivity requires moving beyond what Eli Pariser (2012) has deemed The Filter Bubble. Based on clicks, “likes,” and time spent on pages, social media algorithms create profiles of users and craft content based on their interests. These increasingly personalized environments discourage reflexivity, directing our attention only to what we already are. In such environments, is it any wonder that bots are no better than their human counterparts?
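To make that feedback loop concrete, here is a toy sketch in Python of how clicks, “likes,” and time on page might be folded into a profile that then reorders a feed. This is not any platform’s actual algorithm: the function names, the event format, and the weights are all my own assumptions, chosen only to illustrate the idea.

```python
from collections import Counter

# Illustrative weights only (an assumption, not taken from any real platform).
ENGAGEMENT_WEIGHTS = {"click": 1.0, "like": 2.0}


def build_profile(interactions):
    """Add up a user's engagement per topic from clicks, likes, and dwell time."""
    profile = Counter()
    for event in interactions:
        if event["kind"] == "dwell":
            # Time spent on a page counts as one point per minute.
            profile[event["topic"]] += event["seconds"] / 60.0
        else:
            profile[event["topic"]] += ENGAGEMENT_WEIGHTS[event["kind"]]
    return profile


def rank_feed(profile, candidates):
    """Order posts so topics the user already engages with come first."""
    return sorted(candidates, key=lambda post: profile[post["topic"]], reverse=True)


interactions = [
    {"kind": "click", "topic": "politics"},
    {"kind": "like", "topic": "politics"},
    {"kind": "dwell", "topic": "politics", "seconds": 120},
    {"kind": "click", "topic": "gardening"},
]
candidates = [
    {"id": 1, "topic": "science"},
    {"id": 2, "topic": "politics"},
    {"id": 3, "topic": "gardening"},
]

profile = build_profile(interactions)
feed = rank_feed(profile, candidates)
```

In this sketch, the topic the user has never touched always sinks to the bottom of the feed, which is the bubble in miniature: the more we engage with something, the more of it we are shown.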

Ecological Diversity

For bots to get better—to avoid being racist, xenophobic Nazis—they need to be exposed to diverse perspectives. Humans need to be better before bots can become better. Like people, bots learn from human behaviors and environmental interactions they observe. Thus, AI designers should be attentive to their own behaviors and their own environments. They need to consider ethics in addition to capability. Design teams need to include diverse racial, gender, cultural, religious, and ethical perspectives, and they need to recognize the limitations of their programming.

Researchers and designers need to expand the networks to which bots are exposed. Instead of having bots respond immediately, getting stuck in regressive loops of Nazism, perhaps designers should have their bots inhabit an ecology for an extended period, observing and learning before ever interacting with users.
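One way to picture that suggestion is a bot that stays silent until it has observed enough posts from enough different sources. The Python sketch below is hypothetical: the class name, the thresholds, and the placeholder reply are my own assumptions, and a real bot would need an actual language model and far more careful measures of diversity than a count of sources.

```python
class ObservingBot:
    """A hypothetical bot that listens to its ecology before it ever replies."""

    def __init__(self, min_posts=1000, min_sources=50):
        # Thresholds are arbitrary illustrative values (assumptions).
        self.min_posts = min_posts
        self.min_sources = min_sources
        self.posts_seen = 0
        self.sources_seen = set()

    def observe(self, source, text):
        """Record one observed post and where it came from."""
        self.posts_seen += 1
        self.sources_seen.add(source)

    def ready_to_interact(self):
        """Require both a volume of posts and a breadth of sources."""
        return (
            self.posts_seen >= self.min_posts
            and len(self.sources_seen) >= self.min_sources
        )

    def respond(self, text):
        """Stay silent (return None) until the observation period is complete."""
        if not self.ready_to_interact():
            return None
        return "placeholder reply"  # stand-in for a real language model


bot = ObservingBot(min_posts=3, min_sources=2)
for _ in range(3):
    bot.observe("account_a", "some post")
silent_reply = bot.respond("hello")  # plenty of posts, but only one source

bot.observe("account_b", "another post")
later_reply = bot.respond("hello")  # now two sources have been observed
```

The design choice worth noticing is the second threshold: a bot that hears a thousand posts from a single corner of the network is no more ready to speak than Tay was.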

Moreover, without users behaving better, bots can’t become better. As hateful and malicious people make networked environments toxic, bots will struggle to know anything different. Platforms can do a better job moderating, but that can only do so much. Rather, users should understand that they are always already teachers: teachers of other users, and also teachers of bots. By promoting justice and empowerment, we can teach each other to make a better world.

Conclusion

Reflexivity is a rhetorical mirror, turning our attention to ourselves and our surroundings. As my bot experiment reaffirmed, both humans and bots learn behaviors from their peers and their environments. This is a process that can never really end: rhetorical reflexivity recognizes the constant flux of becoming as we change with our environments. As the gaps between bots and humans continue to shrink, we need to work collaboratively—as a “we” rather than just a “me”—to become better together.

Works Cited

Garrett, Mary. “Tied to a Tree: Culture and Self-Reflexivity.” Rhetoric Society Quarterly, vol. 43, no. 3, 2013, pp. 243–55. doi:10.1080/02773945.2013.792693.

Gries, Laurie E. Still Life with Rhetoric: A New Materialist Approach for Visual Rhetorics. Logan, UT: Utah State University Press, 2015.

Pariser, Eli. The Filter Bubble: How the New Personalized Web Is Changing What We Read and How We Think. London: Penguin Books, 2012.

Shah, Saqib. “Microsoft’s ‘Zo’ Chatbot Picked up Some Offensive Habits.” Engadget, 4 July 2017, https://www.engadget.com/2017/07/04/microsofts-zo-chatbot-picked-up-some-offensive-habits/.

Vincent, James. “Twitter Taught Microsoft’s Friendly AI Chatbot to Be a Racist Asshole in Less than a Day.” The Verge, 24 Mar. 2016, https://www.theverge.com/2016/3/24/11297050/tay-microsoft-chatbot-racist.